On the New Nature of Reality

Imagine coming across this picture on the internet: A person is viciously hitting an old lady, who is leaning back on her walker for support, face horrified. A stream of blood flows from her nose while the perpetrator gleefully mugs for the camera, proud of the abuse.

That perpetrator is you.

You can’t believe it. You’ve never done anything like this in your life, and you wouldn’t even think of doing such a thing. You must have a doppelgänger somewhere. But no, the closer you look, the more certain you are that it’s actually you.

Such is the level of credibility we have arrived at with the latest artificial intelligence image generators. Photorealistic images of people and events that simply don’t exist are being created every second, and with each iteration of the technology, it becomes markedly harder to distinguish reality from…whatever this is.

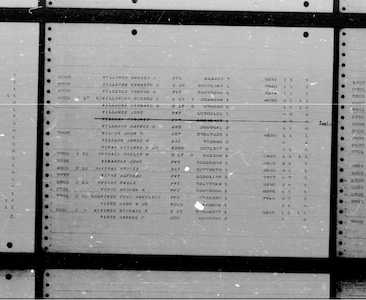

Check out the “Another America” project by photographer/artist Phillip Toledano. He has painstakingly created, through his interactions with AI, photos that reflect a mid-20th-century Manhattan that never existed, but very much looks like it did. A massive sinkhole where an office building once stood. Pollution jellyfish flying over the avenues. Wolves prowling the streets of New York. With the help of a brilliant writer friend, even a false history has been created to accompany some of these photos. Without a pre-existing reference point of New York and its history, how would one know what’s true? Of course, this is exactly his point.

Now, let’s get back to that photo of you beating a defenseless elder. Without any pre-existing knowledge of you or your character, how would a jury know that it simply is not you in that AI-generated image? Furthermore, how unfair is it that you could even be put in this position?

Remember this the next time you’re quick to judge [insert your least favorite political figure here] based on an image that seems too bad (or good) to be true. Remember this the next time you “like” or “share” a deepfake video or modified image that feeds into your own base desire to hurt others.

Because you may be next.
